TL;DR: AION-Search is a text-based search engine for galaxy images that was trained on 300k captions generated by GPT-4.1-mini.

Abstract: Finding scientifically interesting phenomena through manual labeling campaigns limits our ability to explore the billions of galaxy images produced by telescopes. In this work, we develop a pipeline to create a semantic search engine from completely unlabeled image data. Our method leverages Vision-Language Models (VLMs) to generate descriptions for galaxy images, then contrastively aligns a pre-trained multimodal astronomy foundation model with these embedded descriptions to produce searchable embeddings at scale. We find that current VLMs provide descriptions that are sufficiently informative to train a semantic search model that outperforms direct image similarity search. Our model, AION-Search, achieves state-of-the-art zero-shot performance on finding rare phenomena despite training on randomly selected images with no deliberate curation for rare cases. Furthermore, we introduce a VLM-based re-ranking method that nearly doubles the recall for our most challenging targets in the top-100 results. For the first time, AION-Search enables flexible semantic search scalable to 140 million galaxy images, enabling discovery from previously infeasible searches. More broadly, our work provides an approach for making large, unlabeled scientific image archives semantically searchable.

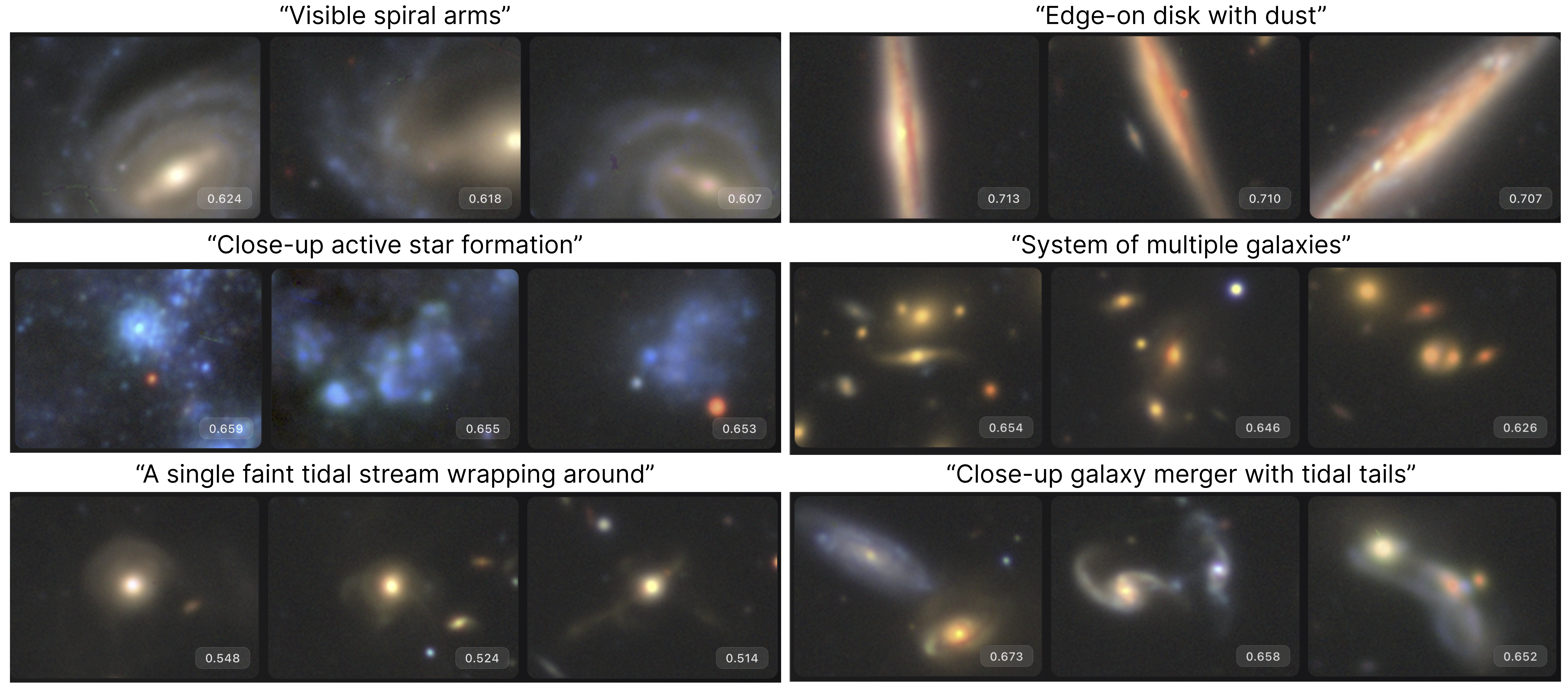

AION-Search enables semantic retrieval of galaxies matching free-form natural language queries. Shown are the top three retrieved images from the Hyper Suprime-Cam (HSC) survey. AION-Search can find specific phenomena that would traditionally require volunteer labeling and training a bespoke classifier.

Traditional galaxy classification relies on manual labeling campaigns that require months of effort and have fixed classes. To enable open-vocabulary semantic search, we use vision-language models to caption galaxy images, then train a contrastive learning model to learn a mapping between image and text.

Evaluating description accuracy versus cost. Left: Vision-language model captions are judged against Galaxy Zoo labels. Right: Model accuracy versus predicted cost for 100k images.

Methodology

We benchmark 13 VLMs on Galaxy Zoo and select GPT-4.1-mini for the best performance-cost trade-off. We generate captions for 275k galaxies from the Legacy Survey and HSC for ~$150, then train a contrastive learning model using AION, an astronomy foundation model, as the image encoder and OpenAI's text-embedding-3-large as the text encoder. The trained model can then generate text-searchable embeddings for any dataset of images. We evaluate search performance on spiral galaxies (26% of data), mergers (2%), and gravitational lenses (0.1%).
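To make the contrastive alignment step concrete, here is a minimal sketch of a symmetric, CLIP-style InfoNCE loss over a batch of paired image and caption embeddings. The exact objective, the temperature value, and the function names below are assumptions for illustration; the text above only states that a contrastive learning model is trained.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def log_softmax(row):
    """Numerically stable log-softmax of a list of logits."""
    m = max(row)
    lse = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - lse for x in row]


def symmetric_contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Assumed CLIP-style loss: the i-th image and i-th caption form the positive pair.

    temperature=0.07 is an illustrative default, not a value from the source.
    """
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j] = scaled cosine similarity between image i and caption j
    logits = [
        [sum(a * b for a, b in zip(img, txt)) / temperature for txt in txts]
        for img in imgs
    ]
    # Image-to-text direction: each row should peak on its diagonal entry.
    loss_i2t = -sum(log_softmax(logits[i])[i] for i in range(n)) / n
    # Text-to-image direction: each column should peak on its diagonal entry.
    columns = [[logits[r][c] for r in range(n)] for c in range(n)]
    loss_t2i = -sum(log_softmax(columns[j])[j] for j in range(n)) / n
    return 0.5 * (loss_i2t + loss_t2i)
```

With this kind of objective, matched image-caption pairs are pulled together and mismatched pairs within the batch are pushed apart, which is what makes the resulting image embeddings searchable by text.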

Although far from perfect, the captions generated by GPT-4.1-mini are of sufficient quality to train a semantic search model that outperforms direct image similarity search.
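The difference between the two search modes can be sketched as a nearest-neighbor lookup in embedding space: semantic search ranks galaxies against the embedding of a text query, while image similarity search ranks them against the embedding of an example image. The sketch below assumes cosine similarity as the ranking score and uses hypothetical names (`top_k`, `gallery`); neither is confirmed by the text above.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_emb, gallery, k=3):
    """Return the ids of the k gallery embeddings most similar to the query.

    gallery: dict mapping a galaxy id to its embedding (hypothetical layout).
    query_emb: embedding of a text query (semantic search) or of an example
    image (similarity search), assumed to live in the same space.
    """
    ranked = sorted(
        gallery.items(),
        key=lambda item: cosine(query_emb, item[1]),
        reverse=True,
    )
    return [galaxy_id for galaxy_id, _ in ranked[:k]]
```

At the scale of 140 million images, an exhaustive scan like this would typically be replaced by an approximate nearest-neighbor index; the sketch only illustrates the ranking logic.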

Results

In terms of nDCG@10, a ranking-quality metric computed over the top 10 retrieved images, AION-Search achieves 0.941 (spirals), 0.554 (mergers), and 0.180 (lenses), versus the best similarity search baselines of 0.643, 0.384, and 0.015, respectively. After a search is performed, we can additionally re-rank the top 1,000 search results with GPT-4.1 by asking it to score each image based on how well it matches the query. This nearly doubles the number of lenses found in the top 100 (7→13), and retrieval performance improves with model size and with the number of samplings per image. Re-ranking is not yet available in the search app.
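For readers unfamiliar with nDCG@k, the following is a minimal sketch of the standard binary-relevance formulation, where each retrieved image is either a true match (1) or not (0). The evaluation conventions actually used for the numbers above (for example, how the ideal ranking is defined) are not stated here, so the `total_relevant` parameter is an assumption.

```python
import math


def dcg(relevances):
    """Discounted cumulative gain: hits at lower ranks count less."""
    return sum(
        rel / math.log2(rank + 1)
        for rank, rel in enumerate(relevances, start=1)
    )


def ndcg_at_k(ranked_relevances, k=10, total_relevant=None):
    """nDCG@k for binary relevance labels listed in retrieval order.

    total_relevant: number of true matches in the whole dataset. If omitted,
    it is assumed to equal the number of matches in the given list.
    """
    top = ranked_relevances[:k]
    if total_relevant is None:
        total_relevant = sum(ranked_relevances)
    # The ideal ranking places every available match at the top.
    ideal = [1] * min(k, total_relevant)
    ideal_dcg = dcg(ideal)
    return dcg(top) / ideal_dcg if ideal_dcg > 0 else 0.0
```

A score of 1.0 means every one of the top-k slots that could hold a true match does; a single match at rank 2 instead of rank 1 already lowers the score, which is why the metric is sensitive to ordering and not only to the count of hits.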

VLM re-ranking boosts rare object retrieval, with performance improving as model size and number of samplings increase.
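The re-ranking step can be sketched as follows: each candidate from the initial search is scored several times against the query, the scores are aggregated, and the candidates are re-sorted. Here `score_fn` is a hypothetical stand-in for a call to a vision-language model, and averaging over samplings is an assumed aggregation rule; the source does not specify how multiple samplings are combined.

```python
from statistics import mean


def rerank(candidates, query, score_fn, n_samples=3):
    """Re-order search results by the mean of repeated relevance scores.

    candidates: unique, hashable ids in their original search order.
    score_fn(candidate, query): hypothetical scorer returning a number,
    standing in for a VLM asked how well the image matches the query.
    n_samples: how many times each candidate is scored (assumed averaging).
    """
    mean_scores = {
        cand: mean(score_fn(cand, query) for _ in range(n_samples))
        for cand in candidates
    }
    # sorted() is stable, so ties keep their original search order.
    return sorted(candidates, key=lambda cand: mean_scores[cand], reverse=True)
```

A usage example with a deterministic stub in place of the model: given fixed scores `{"g1": 2, "g2": 9, "g3": 5}`, calling `rerank(["g1", "g2", "g3"], "gravitational lens", lambda c, q: scores[c])` moves `g2` to the front. With a real, stochastic scorer, increasing `n_samples` reduces the noise in each candidate's aggregated score at the cost of more model calls.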

Conclusion

We demonstrate that captions generated by vision-language models can enable effective semantic search over unlabeled galaxy images at scale. This approach offers a generalizable framework for making large scientific image archives semantically searchable across multiple domains.

BibTeX

@misc{koblischke2025semantic,
  title={Semantic search for 100M+ galaxy images using AI-generated captions},
  author={Nolan Koblischke and Liam Parker and Francois Lanusse and Irina Espejo Morales and Jo Bovy and Shirley Ho},
  year={2025},
  eprint={2512.11982},
  archivePrefix={arXiv},
  primaryClass={astro-ph.IM},
  url={https://arxiv.org/abs/2512.11982},
}